Why does data quality matter?

“Data is the new oil”, and the two have a lot in common

Data is a valuable asset. If you look at the ten largest companies in the world, most of them are technology companies that either derive much of their value from data (like Google or Meta) or offer products and services that depend on, or are improved by, data (Apple, Microsoft, Tesla).

Like crude oil, raw data is valuable, but we must process it to extract its full value. Comparing the price of crude oil with the average price of premium gasoline in the US gives a 2.4x difference.

Similarly, we get more value from data by cleaning, processing, and validating it and using it for analysis and business intelligence, and even more when we use it to feed machine learning models that “predict the future”.

Quality matters

When I was working as a consultant, we visited one of the top paper factories in Latin America, located in Colonia, Uruguay. The factory is incredible, and the technology behind every process is outstanding.

What matters most to them is the wood, the raw material they use to make their product. They only accept one specific species of tree because of its composition and how it fits into their process. Problems with the raw material mean using more chemicals or even breaking one of their costly machines.

That’s an extreme case, but something similar happens with the data that companies use to make decisions.

“In computer science, garbage in, garbage out (GIGO) is the concept that flawed or nonsense (garbage) input data produces nonsense output.” (Wikipedia)

This is where data quality comes in. Many processes depend on data: everything that feeds the decision-making process, from business intelligence to KPIs, metrics, and machine learning models, relies on data quality.

If we feed a report, dashboard, or model with poor-quality data, we end up making decisions based on erroneous (and potentially dangerous) information.

The cost of bad decisions

Poor data quality destroys business value. Recent Gartner research has found that organizations believe poor data quality to be responsible for an average of $15 million per year in losses. (Gartner)

Poor data quality has a big impact when we use data to make business decisions (which, in most cases, we should). We tend to think of the monetary impact, but there is much more to it, and some consequences are even worse.

Employee satisfaction. Imagine you conducted a simple survey and failed to process the data correctly. You could end up damaging your relationship with your employees. Or maybe a financial report contains errors, and based on it you decide to lay off a group of people unnecessarily, hurting your operations.

Product success. You analyzed the results of an A/B test incorrectly, or tested the product with the wrong set of clients, and now your product doesn’t meet your customers’ expectations.

Customer satisfaction. Have you ever felt that a company was getting its marketing wrong? Maybe you received a duplicated email, a product recommendation that wasn’t even close to what you wanted, or some other source of “frustration”. It’s not real frustration, but that feeling of “they should have done better with all the information they have about me” (banks are the worst at this, at least in my country).

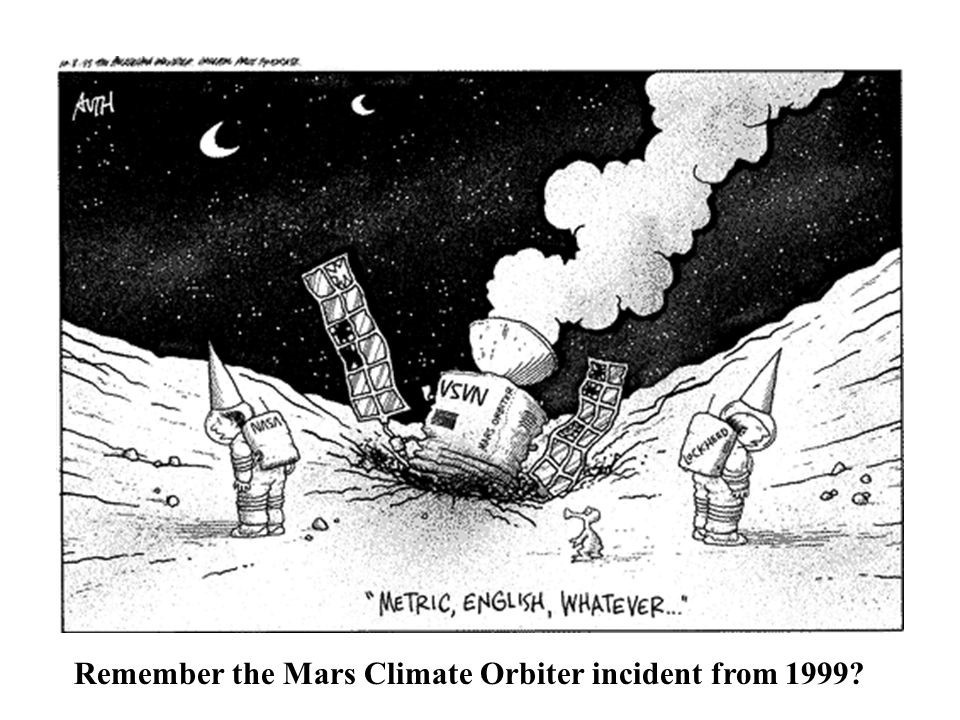

Financial. I only learned about this case recently: in September 1999, after almost 10 months of travel to Mars, the Mars Climate Orbiter was lost, most likely burning up in the Martian atmosphere. Do you know why? A difference in units: one piece of ground software produced thruster data in imperial units (pound-force seconds), while the navigation software expected metric units (newton-seconds). Sounds silly, right? It is, but it’s real, and the mismatch between the metric and imperial systems cost hundreds of millions of dollars (building the orbiter alone cost $125 million). You may know of other cases, but the lesson is the same: even simple data transformations can cost a lot of money.
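The orbiter’s loss came down to a data transformation that nobody checked. A minimal sketch of one way to guard against this kind of error: every value carries its unit explicitly and is normalized to a single unit before any arithmetic happens. The `Impulse` type, the unit strings, and `total_impulse_n_s` are hypothetical names used only to illustrate the idea; this is not how NASA’s software was written.

```python
from dataclasses import dataclass

# 1 pound-force second (lbf*s) is about 4.448222 newton-seconds (N*s).
LBF_S_TO_N_S = 4.448222


@dataclass(frozen=True)
class Impulse:
    """Hypothetical value type: an impulse that always carries its unit."""
    value: float
    unit: str  # either "N*s" or "lbf*s"

    def to_newton_seconds(self) -> float:
        """Normalize to newton-seconds, rejecting units we don't recognize."""
        if self.unit == "N*s":
            return self.value
        if self.unit == "lbf*s":
            return self.value * LBF_S_TO_N_S
        raise ValueError(f"unknown unit: {self.unit!r}")


def total_impulse_n_s(readings: list[Impulse]) -> float:
    """Sum impulses only after converting each one to newton-seconds."""
    return sum(r.to_newton_seconds() for r in readings)


readings = [Impulse(10.0, "N*s"), Impulse(10.0, "lbf*s")]

naive = sum(r.value for r in readings)  # ignores units, silently wrong
correct = total_impulse_n_s(readings)   # converts first
print(naive, round(correct, 2))         # 20.0 54.48
```

The naive sum runs without any error and returns a plausible-looking number, which is exactly what makes unit mismatches dangerous; forcing a conversion step (and failing loudly on unknown units) turns a silent error into a visible one.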

Final words

I want to close with a few words for different roles and backgrounds.

If you’re a businessperson or decision-maker, keep the following in mind:

Data quality will never be perfect, but that shouldn’t prevent you from using data to make decisions.

Trust the data again once issues have been found and fixed. Build a great team and give it room to fix data issues. There will always be problems, but a lack of trust won’t help.

Everyone is responsible for data quality. You can’t blame a single team or area. Encourage each area to own and fix the problems in its part of the process. The data team is not responsible for fixing everything.

For data engineers:

Make sure you have the right tools to detect data quality issues.

Data testing is a hard problem.

Help other teams take responsibility for the data they produce.

Document your data issues and solutions.
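Detecting data quality issues doesn’t have to start with heavy tooling. A minimal sketch, assuming a hypothetical batch of customer records with `email` and `age` fields: it checks completeness, validity, and uniqueness (the kind of duplicate that leads to duplicated marketing emails) and returns a list of issues that can be logged and documented. The field names, the 0 to 120 age range, and `check_records` are illustrative assumptions; dedicated data quality tools apply the same kinds of checks at scale.

```python
from collections import Counter


def check_records(records: list[dict]) -> list[str]:
    """Return readable data-quality issues found in a batch of records.

    Hypothetical schema: each record needs a non-empty "email" and an
    integer "age" between 0 and 120.
    """
    issues = []

    for i, rec in enumerate(records):
        # Completeness: required fields must be present and non-empty.
        for field in ("email", "age"):
            if rec.get(field) in (None, ""):
                issues.append(f"row {i}: missing {field}")

        # Validity: a present age must be an integer in a plausible range.
        age = rec.get("age")
        if age not in (None, "") and (
            not isinstance(age, int) or not 0 <= age <= 120
        ):
            issues.append(f"row {i}: invalid age ({age!r})")

    # Uniqueness: the same email should appear only once.
    counts = Counter(rec["email"] for rec in records if rec.get("email"))
    for email, n in counts.items():
        if n > 1:
            issues.append(f"duplicate email: {email} ({n} rows)")

    return issues


batch = [
    {"email": "a@example.com", "age": 34},
    {"email": "a@example.com", "age": 34},
    {"email": "", "age": 150},
]
for issue in check_records(batch):
    print(issue)
```

Returning plain, readable messages rather than raising on the first problem makes it easy to report every issue in a batch at once and to keep a record of what was found and fixed.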

Thanks for reading!